What Good Is Automatic Differentiation?

AD lowers the number of function evaluations the solver takes. Without AD, nonlinear solvers estimate gradients by finite differences, such as $(f(x+\delta e_1) - f(x))/\delta$, where $e_1$ is the unit vector $(1, 0, \ldots, 0)$. The solver evaluates n finite differences of this form by default, where n is the number of problem variables. For problems with a large number of variables, this process requires a large number of function evaluations.

The list of supported operators includes polynomials, trigonometric and exponential functions and their inverses, along with multiplication and addition and their inverses. See Supported Operations on Optimization Variables and Expressions.
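The evaluation count described above can be sketched in a few lines. This is an illustration in plain Python rather than MATLAB, and it is not how any particular solver is implemented: the names `forward_difference_gradient` and `sum_of_squares` and the step size `delta=1e-6` are assumptions made for the example. It shows that a forward-difference gradient needs one evaluation at the base point plus one per variable.

```python
def forward_difference_gradient(f, x, delta=1e-6):
    """Estimate the gradient of f at x with forward differences.

    Returns the gradient estimate and the number of calls made to f.
    """
    calls = 0

    def counted(point):
        # Wrap f so that every evaluation is counted.
        nonlocal calls
        calls += 1
        return f(point)

    f0 = counted(x)  # one evaluation at the base point
    grad = []
    for i in range(len(x)):
        shifted = list(x)
        shifted[i] += delta  # x + delta * e_i
        grad.append((counted(shifted) - f0) / delta)
    return grad, calls


def sum_of_squares(x):
    # Hypothetical test function; its exact gradient is 2 * x.
    return sum(v * v for v in x)


grad, calls = forward_difference_gradient(sum_of_squares, [1.0, 2.0, 3.0])
print(grad)   # close to [2.0, 4.0, 6.0]
print(calls)  # 4: one base evaluation plus one per variable
```

With n variables the loop costs n extra evaluations per gradient, which is the cost that AD avoids.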

MATLAB Optimization Toolbox software

The details of the process of calculating the gradient are explained in Automatic Differentiation Background, which describes the "forward" and "backward" processes used by most AD software. Currently, Optimization Toolbox uses only "backward" AD. To apply the rules of differentiation, the software must have a differentiation rule for each function that appears in the objective or constraint functions.
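To make the "backward" process concrete, here is a minimal sketch of reverse-mode AD in plain Python. It is not the Optimization Toolbox implementation; the `Var` class and the `sin` and `backward` helpers are hypothetical names introduced for illustration. Each supported operation records its local derivative, which is why a rule is needed for every function that appears in an expression.

```python
import math


class Var:
    """A node in the computation graph (hypothetical helper)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent node, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        # Rule: d(a + b)/da = 1 and d(a + b)/db = 1
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        # Rule: d(a * b)/da = b and d(a * b)/db = a
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def sin(v):
    # Rule: d sin(a)/da = cos(a)
    return Var(math.sin(v.value), ((v, math.cos(v.value)),))


def backward(output):
    """Propagate derivatives from the output back to every input."""
    order, seen = [], set()

    def visit(node):
        # Post-order traversal: parents are listed before their children.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):  # children first, then their parents
        for parent, local in node.parents:
            parent.grad += local * node.grad


x, y = Var(2.0), Var(3.0)
f = x * y + sin(x)  # f(x, y) = x*y + sin(x)
backward(f)
print(x.grad)  # y + cos(x)
print(y.grad)  # x
```

One forward evaluation and one backward sweep yield the derivative with respect to every input, regardless of how many inputs there are.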

MATLAB Optimization Toolbox code

The problem-based approach to optimization is to write your problem in terms of optimization variables and expressions. For example, to minimize the test function […], you write it as an expression in optimization variables. The way that solve and prob2struct convert optimization expressions into code is essentially the way that calculus students learn to differentiate: take each part of an expression and apply the rules of differentiation.

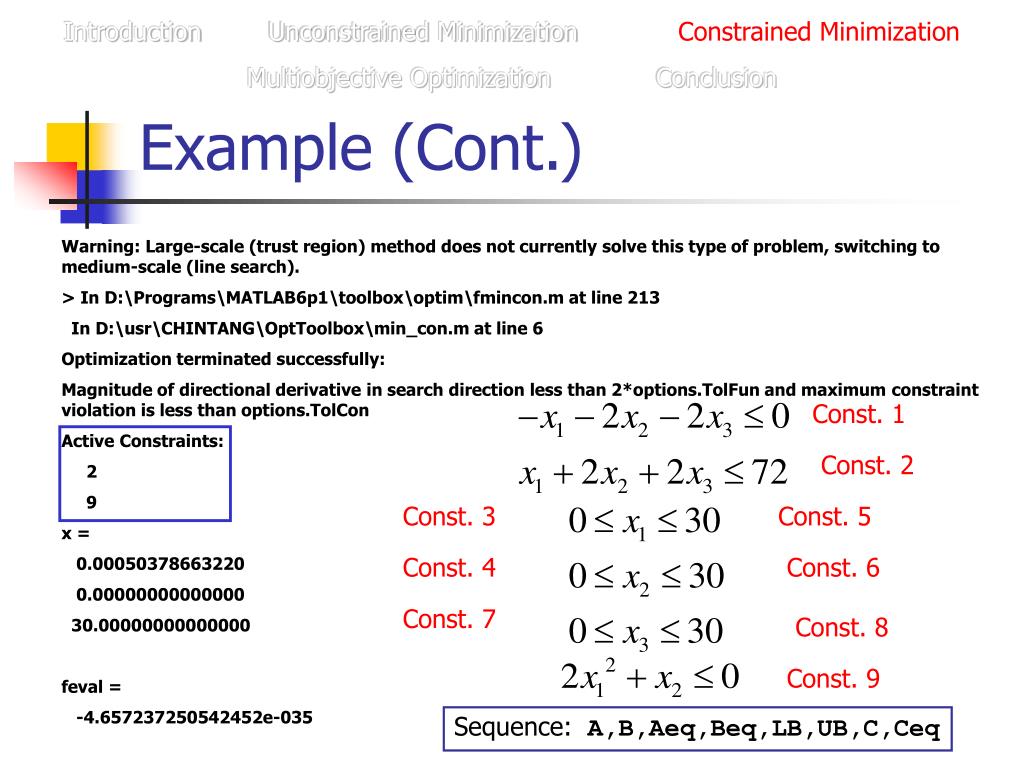

Optimization deals with selecting the best option among a number of possible choices that are feasible, that is, choices that don't violate constraints. MATLAB can be used to optimize parameters in a model to best fit data, increase the profitability of a potential engineering design, or meet some other type of objective that can be described mathematically with variables and equations. Mathematical optimization problems may include equality constraints (e.g. =), inequality constraints (e.g. <, <=, >, >=), objective functions, algebraic equations, differential equations, continuous variables, discrete or integer variables, etc.

One example of an optimization problem from a benchmark test set is the Hock Schittkowski problem #71. This problem has a nonlinear objective that the optimizer attempts to minimize. The variable values at the optimal solution are subject to (s.t.) both equality (=40) and inequality (>25) constraints.
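As a rough illustration, the pieces of problem #71 can be written out as plain functions. The sketch below uses Python rather than MATLAB and assumes the formulation as it is commonly stated in the benchmark literature: minimize $x_1 x_4 (x_1 + x_2 + x_3) + x_3$ subject to $x_1 x_2 x_3 x_4 \ge 25$, $x_1^2 + x_2^2 + x_3^2 + x_4^2 = 40$, and $1 \le x_i \le 5$, with starting point $(1, 5, 5, 1)$. The function names and the tolerance are hypothetical, and no solver is included.

```python
def objective(x):
    # Nonlinear objective that the optimizer attempts to minimize.
    x1, x2, x3, x4 = x
    return x1 * x4 * (x1 + x2 + x3) + x3


def product_constraint(x):
    # Inequality constraint: this product must be at least 25.
    x1, x2, x3, x4 = x
    return x1 * x2 * x3 * x4


def sum_of_squares_constraint(x):
    # Equality constraint: this sum must equal 40.
    return sum(v * v for v in x)


def is_feasible(x, tol=1e-6):
    # Check the bounds and both constraints within a small tolerance.
    in_bounds = all(1.0 <= v <= 5.0 for v in x)
    return (in_bounds
            and product_constraint(x) >= 25.0 - tol
            and abs(sum_of_squares_constraint(x) - 40.0) <= tol)


x0 = [1.0, 5.0, 5.0, 1.0]  # commonly used starting point (assumption)
print(objective(x0))                  # 16.0
print(product_constraint(x0))         # 25.0
print(sum_of_squares_constraint(x0))  # 52.0
print(is_feasible(x0))                # False: the equality constraint is violated
```

The starting point satisfies the bounds and the inequality but not the equality constraint, so a solver has to move toward feasibility while reducing the objective.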
